Facebook Open Graph at ekstrabladet.dk

(This post is a straight-up translation from Danish of a post on the Ekstra Bladet development blog)

Right before the 2010 World Cup started, ekstrabladet.dk (the Danish tabloid where I work) managed to get an interesting implementation of the new Facebook Open Graph protocol up and running. This blog post describes what this feature does for our users and what possibilities we think Open Graph holds. I will write a post detailing the technical side of the implementation shortly.

The Open Graph protocol involves adding markup to pages on your site so that Facebook users can ‘like’ them in the same way that they can like fan pages on Facebook. A simple Open Graph implementation for a news website might add markup that lets users like individual articles, sections and the front page. We went a bit further: our readers can now ‘like’ the 700-800 soccer players competing in the World Cup. Readers like a player by hovering over the linkified player name in an article. You can try it out in this article (which tells our readers about the new feature, in Danish) or check out the action shot below.
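To make the markup idea concrete, here is a minimal Python sketch of how the Open Graph `<meta>` tags for a player page could be generated. It is not our production code: the function name `open_graph_tags` and the example.com URLs are made up for illustration, and I am assuming the basic `og:` properties from the Open Graph protocol (`og:title`, `og:type`, `og:url`, `og:image`, `og:site_name`).

```python
from html import escape


def open_graph_tags(title, og_type, url, image, site_name):
    """Render Open Graph <meta> tags for the <head> of a page."""
    properties = [
        ("og:title", title),
        ("og:type", og_type),
        ("og:url", url),
        ("og:image", image),
        ("og:site_name", site_name),
    ]
    # Escape attribute values so quotes or ampersands cannot break the markup.
    return "\n".join(
        '<meta property="{}" content="{}" />'.format(name, escape(value, quote=True))
        for name, value in properties
    )


# Hypothetical player page; the URLs are placeholders, not real site paths.
tags = open_graph_tags(
    title="Nicklas Bendtner",
    og_type="athlete",
    url="http://example.com/players/nicklas-bendtner",
    image="http://example.com/images/nicklas-bendtner.jpg",
    site_name="ekstrabladet.dk",
)
print(tags)
```

Each likeable thing (an article, a section, a player) needs its own URL that serves tags like these, since Facebook reads them from the page that the like button points at.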

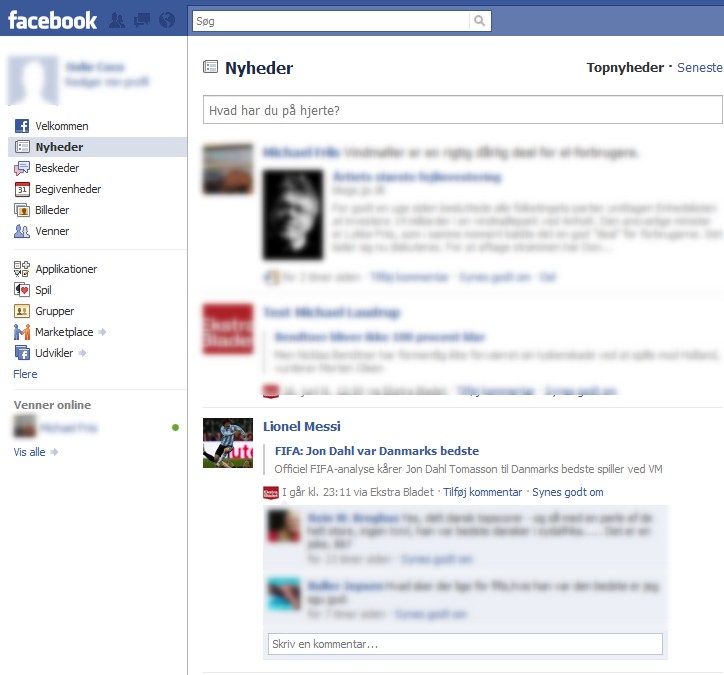

When a reader likes a player, Facebook sticks a notice in that user's feed, similar to the ones you get when you like normal Facebook pages. The clever bit is that we at Ekstra Bladet can now, using the Facebook publishing API, automatically post updates to Facebook users who like soccer players on ekstrabladet.dk. For example, “Nicklas Bendtner on ekstrabladet.dk” (Bendtner is a Danish striker) will post an update to his fans every time we write a new article about him, and so will all the other players. Below, you can see what this looks like in people's Facebook feeds (in this case it is Lionel Messi posting to his fans).
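As a rough illustration of the publishing step, the sketch below builds (but does not send) an HTTP POST to the feed of a likeable page. I am assuming the Graph API convention of posting `message`, `link` and `access_token` to `/{page-id}/feed`; the page ID, token and article URL are placeholders, and the exact parameters Facebook accepts may differ from what is shown here.

```python
from urllib.parse import urlencode

GRAPH_API = "https://graph.facebook.com"


def build_feed_post(page_id, access_token, message, link):
    """Return (url, body) for an HTTP POST that publishes to a page's feed."""
    url = "{}/{}/feed".format(GRAPH_API, page_id)
    # Form-encode the fields; the token identifies who is allowed to publish.
    body = urlencode({"message": message, "link": link, "access_token": access_token})
    return url, body


# All values below are hypothetical placeholders.
url, body = build_feed_post(
    page_id="1234567890",
    access_token="PAGE_ACCESS_TOKEN",
    message="New article about Nicklas Bendtner",
    link="http://example.com/articles/bendtner-scores",
)
print(url)
print(body)
```

Keeping request construction separate from sending makes it easy to test and to queue or batch the posts later.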

Behind the scenes the players are stored using a semantic-web/linked-data datastore so that we know that the “Lionel Messi” currently playing for the Argentinian National Team is the same “Lionel Messi” that will be playing for FC Barcelona in the fall.
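The idea of one player identity with several team memberships can be sketched with plain subject-predicate-object triples. This is only an in-memory toy in Python, not our actual datastore; the identifiers (`player:lionel-messi`, `playsFor` and so on) are invented for the example.

```python
# Each fact is a (subject, predicate, object) triple, as in linked data.
# All identifiers and predicate names here are hypothetical.
triples = {
    ("player:lionel-messi", "name", "Lionel Messi"),
    ("player:lionel-messi", "playsFor", "team:argentina"),
    ("player:lionel-messi", "playsFor", "team:fc-barcelona"),
    ("team:argentina", "name", "Argentinian National Team"),
    ("team:fc-barcelona", "name", "FC Barcelona"),
}


def objects(subject, predicate):
    """Return all objects for a subject and predicate, sorted for stable output."""
    return sorted(o for s, p, o in triples if s == subject and p == predicate)


def teams_of(player_id):
    """Return the names of every team the player is linked to."""
    return [objects(team, "name")[0] for team in objects(player_id, "playsFor")]


print(teams_of("player:lionel-messi"))
```

Because both team memberships hang off the same player identifier, a like recorded during the World Cup still points at the same player when the club season starts.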

Our hope is that we can use the Open Graph implementation to give our readers prompt news about stuff they like, directly in their Facebook news feeds. We are looking at what other use we can make of this privileged access to users' feeds. One option would be to give our users selected betting suggestions for matches that involve teams they are fans of (this would be similar to a service we currently provide on ekstrabladet.dk).

We have already expanded what our readers can like to bands playing at this year's Roskilde Festival (see this article) and we would like to expand further to stuff like consumer products, brands and vacation destinations. We could then use our access to those users' feeds to advertise those products or do affiliate marketing (although I have to check Danish law and Facebook's terms before embarking on this). In general, Facebook Open Graph is a great way for us to learn about our readers’ wants and desires and it is a great channel for delivering personalized content in their Facebook feeds.

Are there no drawbacks? Certainly there are. We haven’t quite figured out how best to let our readers like terms in article texts. Our first try involved linkifying the terms and sticking a small Facebook thumb icon after each one. Some of our users found that this ruined the reading experience, however (even if you don’t know Danish, you might be able to catch the meaning of the comments below this article). Now the thumb is gone, but the blue link remains. As a replacement for the thumb, we are contemplating adding a box at the bottom of articles, listing the terms used in that article for easy liking.

Another drawback is the volume of updates we are pushing to our users. During the World Cup we might write 5 articles in a day that mention any one player, and our readers may be subscribed to updates from several players. Facebook does a pretty good job of aggregating posts, but it is not perfect. We are contemplating doing daily digests to avoid swamping people's news feeds.
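A daily digest could be as simple as grouping the day's articles per player and posting once per group. The Python sketch below shows that grouping only; the article records and their field names (`date`, `title`, `players`) are made-up sample data, not our actual article format.

```python
from collections import defaultdict


def daily_digests(articles):
    """Group article titles by (date, player) so each player posts once per day."""
    grouped = defaultdict(list)
    for article in articles:
        for player in article["players"]:
            grouped[(article["date"], player)].append(article["title"])
    return dict(grouped)


# Made-up sample data for illustration only.
articles = [
    {"date": "2010-06-14", "title": "Article A", "players": ["Nicklas Bendtner"]},
    {"date": "2010-06-14", "title": "Article B", "players": ["Nicklas Bendtner", "Lionel Messi"]},
    {"date": "2010-06-15", "title": "Article C", "players": ["Lionel Messi"]},
]

digests = daily_digests(articles)
for (date, player), titles in sorted(digests.items()):
    print(date, player, titles)
```

With this grouping, a player mentioned in 5 articles on one day produces a single post listing all 5, instead of 5 separate updates.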

A third drawback is that it is not Ekstra Bladet that is accumulating information about our readers, but Facebook. Even though we are pretty good at reader identity via our “The Nation!” initiative, we have to recognize that the audience is much larger when using Facebook. Using Facebook also gives us superb access to readers' social graphs and news feeds, something we likely could not build ourselves. A mitigating factor is that Facebook gives us pretty good APIs for pulling information about how readers interact with content on our site.

Stay tuned if you want to know more about our Facebook Open Graph efforts.